A Practical Guide to Modern Trust and Safety

At its core, trust and safety is about one thing: protecting the people who use your platform from harm. It's the dedicated practice of shielding them from bad actors, abusive content, and deceptive behavior.

This isn't just about a single team or a piece of software. It’s the entire ecosystem of policies, human expertise, and technology working together to make your online space a safe and reliable place to be.

What Trust and Safety Really Means Today

Think of any online community—a social network, a marketplace, a collaborative tool—as a digital neighborhood. Your trust and safety program acts as its essential civic services. The policies are the local laws, the moderation teams are the neighborhood watch, and the incident response protocols are the emergency services.

Without this structure, the neighborhood would quickly become a chaotic, untrustworthy place nobody wants to visit. This function has moved far beyond just deleting spam. It’s now a sophisticated, multi-layered defense system that is absolutely critical to protecting users and, by extension, the company's reputation.

From Simple Spam to Synthetic Threats

Not long ago, the main headaches were things like account phishing, obvious spam, and unwanted explicit content. While those problems haven't gone away, the threats we face now are orders of magnitude more complex. Malicious actors are deploying sophisticated tools and coordinated strategies to launch attacks that are deliberately designed to evade detection.

The game changed completely with generative AI.

Nowhere is this more apparent than with synthetic media. The danger of deepfakes, for instance, is profound. Research shows that people correctly identify high-quality video deepfakes only 24.5% of the time. That leaves a massive gap that human review alone can't close, which is why specialized, automated AI detection has become essential. The market is racing to keep up, with the Deepfake Detection Market projected to skyrocket from USD 114.3 million in 2024 to USD 5,609.3 million by 2034.

The Four Pillars of a Modern Trust and Safety Program

Building a resilient T&S program is no longer a "nice-to-have"—it's a fundamental business requirement. A modern framework is built on four interconnected pillars that combine proactive strategy with reactive defense. These components work in concert to establish clear standards and maintain accountability, as seen in evolving organizational approaches to Security, Trust, and Accountability at Legal Chain.

The table below outlines these four essential pillars and their objectives.

| Pillar | Core Function | Key Objective |
|---|---|---|
| Policy | Establishing the rules of engagement for the platform. | Create clear, fair, and enforceable guidelines that define acceptable user behavior and content. |
| Moderation | Enforcing the rules at scale. | Combine human review and automated systems to consistently identify and act on policy violations. |
| Incident Response | Managing crises and emerging threats. | Quickly detect, contain, and neutralize urgent threats to prevent widespread harm and system abuse. |
| Transparency & Reporting | Building user confidence and accountability. | Communicate actions, policy changes, and safety metrics to users and stakeholders to foster trust. |

These pillars are not independent silos; they are deeply interconnected. Strong policies are useless without effective moderation, and a great incident response plan needs transparent communication to rebuild trust after an event.

Ultimately, a well-executed trust and safety initiative creates an environment where people feel secure enough to connect, create, and transact. That feeling of safety is what drives sustainable growth and builds lasting user loyalty.

The Evolving Threat Landscape: From Spam to AI Synthetics

Not too long ago, trust and safety teams were the digital equivalent of bouncers at a club, mostly focused on kicking out the obvious troublemakers: spam bots, blatant scams, and clear-cut policy violations. But that was a different era. Today, the job looks less like a bouncer's and more like a counter-intelligence agent's, operating in a high-stakes, constantly shifting landscape.

The bad actors have gotten smarter, their tools more powerful. This evolution demands a complete rethinking of how we protect our platforms and our users.

The spectrum of risks we face today is incredibly broad. We're still fighting the classic battles against things like hate speech and harassment, but the scale and intensity have been dialed up to eleven. At the same time, we're dealing with highly coordinated financial fraud schemes that are more sophisticated than ever before.

But the real game-changer, the threat that has completely rewritten the rulebook, is the explosion of synthetic media. What once felt like science fiction is now a daily operational reality, and it’s creating a whole new category of threats that can slip right past our old defenses.

The Rise of Synthetic Media Threats

When we talk about synthetic media, we’re talking about content powered by generative AI—things like deepfakes, AI-generated video, and eerily realistic voice clones. These aren't just your cousin's clumsy Photoshop edits. They are entirely new, artificially created assets designed to be indistinguishable from the real thing. And the technology has put the power of mass deception into the hands of anyone with a decent computer.

This isn't some far-off problem looming on the horizon. It's here, right now, and it's hitting businesses and individuals hard.

- Financial Fraud: Scammers are using deepfake video and audio to impersonate executives in "CEO fraud" schemes, tricking finance departments into wiring away millions.

- Disinformation Campaigns: Hostile actors are deploying hyper-realistic (but completely fake) videos of political leaders to stir up chaos, interfere with elections, and chip away at public trust.

- Personal Exploitation: Malicious individuals are using non-consensual deepfake pornography as a devastating weapon to harass, intimidate, and inflict severe emotional and reputational harm.

- Brand Sabotage: Imagine a competitor creating a convincing fake video of your CEO making a racist comment or your flagship product exploding. It's a brand nightmare that can tank your stock value overnight.

The scary part isn't just the sophistication of these fakes; it's their scalability. A single person can now churn out thousands of unique pieces of synthetic content, completely overwhelming any moderation team that relies on human review.

The old playbook of looking for blurry edges or weird glitches to spot a fake is officially dead. Modern deepfakes are often seamless, making them nearly impossible for the human eye to catch. This new reality demands a technological response.

The numbers back this up. In 2023, a staggering 65% of businesses across the globe reported dealing with security incidents involving deepfakes. This isn't a niche issue anymore; it's a mainstream threat. It's no surprise, then, that the market for AI Deepfake Detectors is projected to explode, growing from USD 170 million in 2024 to an estimated USD 1,555 million by 2034. For a deeper dive into these figures, you can find more insights on AI deepfake detector market growth at intelmarketresearch.com.

Why Traditional Trust and Safety Fails

Here's the hard truth: our traditional trust and safety models were built for a world that no longer exists. They were designed to catch known patterns of abuse—flagging specific keywords in a hate speech lexicon or blocking a blacklisted IP address known for spam.

Synthetic media doesn't play by those rules. Each deepfake is a unique digital creation. It doesn't have a consistent, repeatable signature that a static, rule-based system can learn to recognize. It's like trying to catch a master of disguise who changes their entire identity for every job. Our old methods simply can't keep up.

On top of that, there's the issue of speed. A malicious deepfake can go viral in a matter of minutes, causing irreversible damage long before a human moderator even sees it in their queue. This massive gap in speed and scale makes a proactive, technology-first approach the only viable path forward for any modern trust and safety program. It's also why many organizations are turning to new tools that can help identify AI-generated content without invading user privacy. To learn more about this, check out our review of tools designed to spot undetectable AI.

Building Your Trust and Safety Playbook From Scratch

Alright, so you understand the threats. Now, how do you actually build a defense? This is where your trust and safety playbook comes in. Think of it as your organization's single source of truth for handling platform abuse—the definitive guide that turns good intentions into concrete, repeatable actions.

It's not just another corporate document collecting dust. A good playbook is like a city's emergency response plan. It lays out the laws (your policies), the procedures for first responders (your moderators), and the communication strategy to keep everyone calm and informed when a crisis hits. Without one, you're just winging it, and your response to incidents will be chaotic, inconsistent, and will ultimately chip away at the very user trust you're trying to build.

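To create a playbook from the ground up, start with three of the four pillars: setting the rules (policy), enforcing them (moderation), and handling the inevitable fires (incident response). The fourth, transparency and reporting, runs through all three and gets its own section later in this guide.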

Crafting Clear and Enforceable Policies

Your community guidelines are the absolute foundation of your entire trust and safety operation. It’s simple: if your policies are vague, contradictory, or impossible for a normal person to understand, your moderation efforts are doomed from the start. The goal here is to be both comprehensive and crystal clear.

This means going way beyond just listing things people can't do. You have to anticipate the gray areas and give specific examples that leave no room for doubt. For instance, a lazy policy says "No harassment." A strong policy defines what harassment actually looks like on your platform—things like targeted insults, coordinated dogpiling, or sharing someone's private information without their consent.

A well-crafted policy framework always includes:

- Explicit Definitions: Don't assume users know what you mean by "hate speech" or "misinformation." Spell it out with concrete examples that apply directly to your platform.

- Actionable Guidelines: Be transparent about the consequences. A good penalty system should scale based on the severity and frequency of the violation.

- A Clear Appeals Process: People make mistakes, and so do your systems. Giving users a straightforward way to appeal a decision shows fairness and, just as importantly, helps you spot weaknesses in your own enforcement.
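
To make the idea of a penalty system that scales with severity and frequency more concrete, here is a minimal sketch. The severity levels, the penalty names, and the "one rung per prior violation" rule are all illustrative assumptions, not a recommended standard; your own ladder should come from your policies.

```python
from enum import IntEnum


class Severity(IntEnum):
    # Hypothetical severity tiers; the examples are illustrative only.
    LOW = 1      # e.g. mild spam
    MEDIUM = 2   # e.g. targeted insults
    HIGH = 3     # e.g. sharing someone's private information


# Hypothetical penalty ladder, ordered from lightest to heaviest.
PENALTIES = ["warning", "content_removal", "temporary_suspension", "permanent_ban"]


def choose_penalty(severity: Severity, prior_violations: int) -> str:
    """Pick a penalty that escalates with both severity and repeat offenses."""
    # Start on a rung set by severity, then climb one rung per prior violation.
    rung = (severity - 1) + prior_violations
    # Clamp to the top of the ladder so heavy repeat offenders get the last rung.
    return PENALTIES[min(rung, len(PENALTIES) - 1)]


print(choose_penalty(Severity.LOW, 0))
print(choose_penalty(Severity.MEDIUM, 1))
print(choose_penalty(Severity.HIGH, 5))
```

Writing the ladder down as data rather than burying it in moderator judgment also makes it easier to publish the consequences alongside the rules.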

Choosing Your Content Moderation Strategy

Once your rules are written down, you have to decide how you're going to enforce them. This is where content moderation comes in. It's the operational arm of your playbook, the team on the front lines reviewing content and taking action. You've basically got three models to choose from, each with its own pros and cons.

1. Human Moderation: This is exactly what it sounds like—teams of trained people manually reviewing flagged content. Humans are fantastic at picking up on nuance, sarcasm, and cultural context that an algorithm would completely miss. The downside? It's slow, incredibly expensive to scale, and can take a serious psychological toll on the reviewers.

2. Automated Moderation: Here, you're using AI and machine learning to automatically flag or remove content. Automation is a beast—it’s lightning-fast and can chew through massive volumes of content, making it perfect for clear-cut violations like spam or graphic violence. But algorithms are literal. They can lack contextual understanding, leading to false positives (nuking perfectly fine content) and false negatives (letting harmful stuff slip through).

3. Hybrid Moderation: This approach is the sweet spot for most platforms. It combines the raw power of automation with the careful judgment of humans. The automated systems handle the easy, high-volume takedowns, while flagging the tricky, borderline cases for a person to review. This frees up your human experts to focus their energy where it's needed most, creating a system that's both efficient and intelligent.
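
The hybrid model boils down to a routing decision, which can be sketched in a few lines. This assumes a hypothetical classifier that outputs a violation score between 0 and 1; the two thresholds are made-up starting points that you would tune against your own false positive and false negative rates.

```python
def route(violation_score: float,
          remove_above: float = 0.95,
          allow_below: float = 0.20) -> str:
    """Route content based on a (hypothetical) classifier's violation score."""
    if not 0.0 <= violation_score <= 1.0:
        raise ValueError("violation_score must be between 0 and 1")
    # High-confidence violations are handled automatically.
    if violation_score >= remove_above:
        return "auto_remove"
    # High-confidence benign content is left alone.
    if violation_score <= allow_below:
        return "allow"
    # Everything in between is a borderline case for a person to review.
    return "human_review"


for score in (0.99, 0.50, 0.05):
    print(score, route(score))
```

Widening the gap between the two thresholds sends more content to people and fewer decisions to the machine, which is the main dial you have for trading cost against accuracy.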

A winning moderation strategy isn't just about taking down bad stuff; it's about protecting the good. The goal is surgical precision—minimizing harm to users while fiercely protecting their freedom of expression.

As you build your playbook, keep in mind that your team will handle massive amounts of user-generated content and internal documents, so following digital asset management best practices for maintaining trust and safety is essential to keeping it all organized.

Designing an Incident Response Framework

Let's be realistic: no matter how good your policies and moderation are, major incidents are going to happen. An incident response framework is your step-by-step guide for what to do when things go sideways. It’s your "break glass in case of emergency" plan.

A solid framework is what separates a calm, coordinated response from outright panic. It walks your team through every critical step and usually follows a few key phases:

- Detection and Triage: How do you even know a crisis is brewing? Who gets the first call and decides how bad it really is?

- Containment and Escalation: What are the immediate first aid steps to stop the bleeding? Who needs to be woken up at 3 a.m., and when?

- Investigation and Resolution: Time to play detective. You need a root cause analysis to figure out what actually happened, followed by a clear plan to fix it.

- Communication: How do you talk to your team, your executives, and your users? Transparency and timeliness are everything here.

- Post-Mortem Analysis: After the dust settles, you have to ask the hard questions. What did we learn? And how do we update our policies, tools, and processes so this never, ever happens again?

This structured process ensures you not only survive the crisis but actually learn from it, making your entire trust and safety ecosystem stronger for the next time.

Integrating Technology for a Proactive Defense Strategy

Trying to fight AI-generated threats with human reviewers alone is like trying to stop a tidal wave with a bucket. It's just not going to work. The sheer volume, speed, and sophistication of synthetic media make it practically impossible for human teams to keep up on their own. This is where a proactive defense, powered by the right technology, becomes the absolute core of any modern trust and safety operation.

The old playbooks focused on spotting obvious visual glitches just don't cut it anymore. Today’s threats demand a layered, automated approach that can analyze content at a scale and depth far beyond what any person could manage.

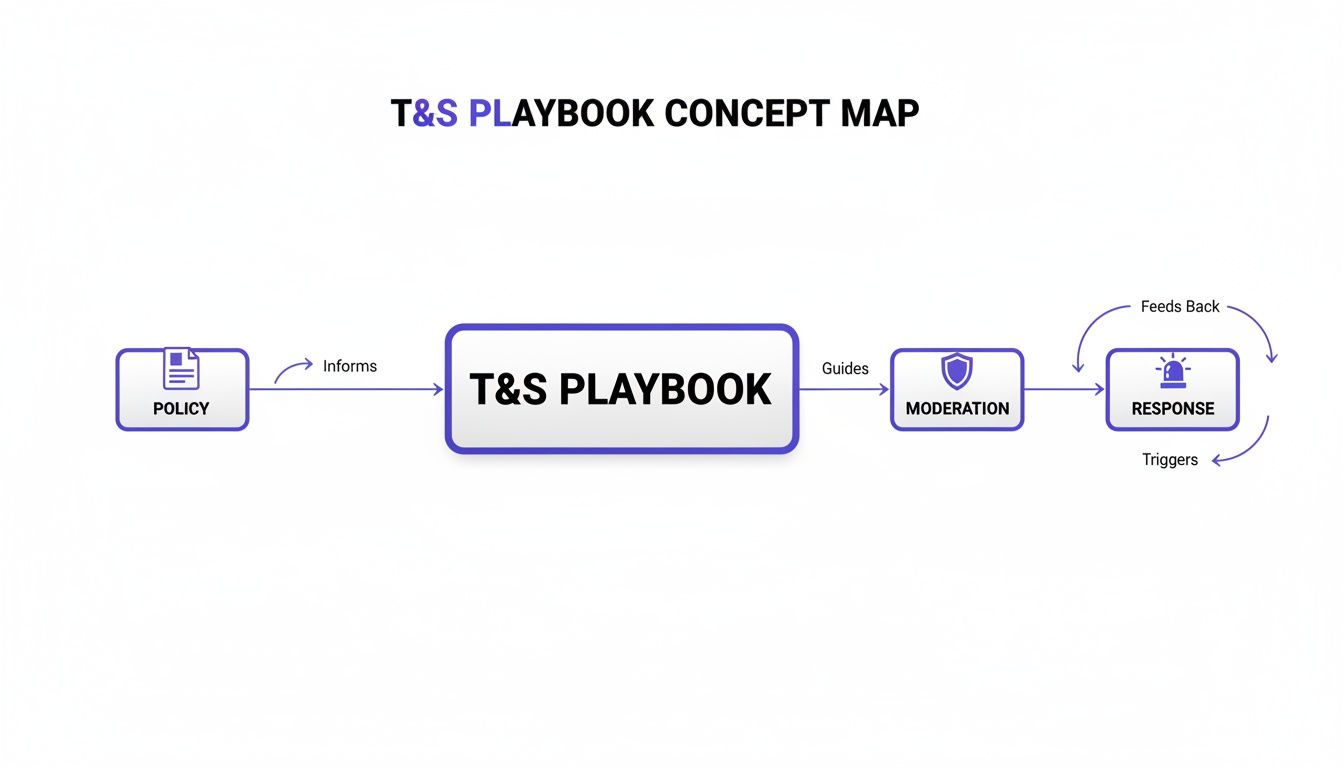

This is how it all fits together—policy guides your playbook, which in turn directs both your everyday moderation and your critical incident response.

A solid playbook, built on clear policies, is the engine that drives everything.

The Power of Multi-Signal Detection

The most effective tools out there are built on a principle called multi-signal detection. Think of it like a detective investigating a crime scene. A good detective doesn't just look for one clue; they dust for fingerprints, analyze DNA, check security footage, and interview witnesses. Any single piece of evidence might be shaky, but when you put them all together, they paint a clear picture of what really happened.

AI detection tools do the exact same thing. They hunt for multiple, distinct digital fingerprints left behind during the AI generation process. Instead of betting everything on spotting one potential flaw, they cross-reference dozens of signals to build a high-confidence verdict on whether a video is real or fake. This drastically reduces the risk of getting it wrong—both false positives and false negatives.
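
One simple way to picture this cross-referencing is a weighted average of per-signal scores. The sketch below is an illustration only: the signal names, weights, and thresholds are assumptions, and real detection tools may combine their signals very differently (for example, with a trained model rather than fixed weights).

```python
def combine_signals(scores: dict[str, float], weights: dict[str, float]) -> float:
    """Return a weighted average of per-signal fake-likelihood scores in [0, 1]."""
    # Only use signals that were actually produced for this file.
    used = {name: weights[name] for name in scores if name in weights}
    total_weight = sum(used.values())
    if total_weight <= 0:
        raise ValueError("no weighted signals available")
    return sum(scores[name] * w for name, w in used.items()) / total_weight


def verdict(combined: float, fake_above: float = 0.7, real_below: float = 0.3) -> str:
    """Turn a combined score into a verdict, leaving the middle band to people."""
    if combined >= fake_above:
        return "likely_fake"
    if combined <= real_below:
        return "likely_real"
    return "needs_human_review"


# Hypothetical signal names and weights, chosen purely for illustration.
weights = {"frame_artifacts": 0.5, "audio_spectral": 0.3, "temporal_consistency": 0.2}
scores = {"frame_artifacts": 0.9, "audio_spectral": 0.8, "temporal_consistency": 0.4}

combined = combine_signals(scores, weights)
print(round(combined, 2), verdict(combined))
```

Notice that the one weak signal (temporal consistency) lowers the combined score but does not flip the verdict, which is the detective analogy in miniature: no single clue decides the case.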

How Advanced Detection Technology Actually Works

So, what does this look like in practice? Let's break down how a tool like an AI Video Detector uses a multi-signal approach to dissect a piece of media, combining several forensic techniques into one lightning-fast analysis.

- Frame-Level Analysis: The system scans every single frame, looking for microscopic artifacts and pixel-level inconsistencies invisible to the naked eye. It’s essentially hunting for the "fingerprints" left by the specific AI models used to create the fake.

- Audio Forensics: It's not just about what you see. The tool also scrutinizes the audio track for tell-tale signs of synthesis, like unnatural sound frequencies, spectral anomalies, or the subtle artifacts common in AI-cloned voices.

- Temporal Consistency Checks: This is about analyzing how things change over time. It spots illogical motion or physical impossibilities. An AI might generate a flawless-looking face, but it often struggles with how that face moves, blinks, or interacts with the environment in a physically coherent way.

To truly grasp the gap between human and machine capabilities, consider this comparison:

Manual Review vs. Automated Detection for Synthetic Media

| Feature | Manual Review | Automated Multi-Signal Detection (e.g., AI Video Detector) |
|---|---|---|
| Speed | Slow; takes minutes or hours per video | Extremely fast; analyzes files in seconds or minutes |
| Scale | Very limited; easily overwhelmed by volume | Nearly infinite; can process thousands of files simultaneously |
| Accuracy | Highly variable and prone to human error | Consistently high accuracy by cross-referencing dozens of invisible signals |
| Sophistication | Can only spot obvious, visible flaws | Detects subtle, microscopic artifacts left by advanced AI generation models |
| Cost-Effectiveness | Very expensive at scale due to labor costs | Highly cost-effective; reduces the need for large teams of manual reviewers |
| Wellbeing Impact | Exposes moderators to potentially harmful content | Shields humans from the initial wave of harmful content, allowing them to focus on complex edge cases |
| Audio Analysis | Limited to what the human ear can perceive | Conducts deep forensic analysis of audio spectrograms and frequencies |
| Consistency | Subjective; depends on individual moderator skill | Objective and standardized; delivers consistent results based on data |

The table makes it clear: while human judgment is invaluable for nuanced policy decisions, relying on it for initial detection is a losing battle. Automation is the only viable path forward for identifying synthetic media at scale.

Why Privacy-First Tools are a Game-Changer

For many organizations, the idea of uploading sensitive videos to a third-party server is a complete non-starter. Think about newsrooms vetting whistleblower footage, legal teams handling confidential evidence, or a company investigating internal fraud. The risk is just too high.

This is where a privacy-first architecture makes all the difference. The best detection tools are now built to perform a complete analysis without ever permanently storing the user's video. The file is processed in a secure, temporary environment and is permanently wiped the moment the results are delivered.
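
The "process, then wipe" pattern can be sketched with Python's standard library. This is an illustration of the general idea, not how any particular product works: `analyze` is a hypothetical stand-in for a real detector, and deleting a temporary file is not the same as a forensically secure wipe.

```python
import os
import tempfile
from typing import Callable


def analyze_ephemerally(video_bytes: bytes, analyze: Callable[[str], dict]) -> dict:
    """Write an upload to a private temp directory, analyze it, then delete it."""
    # TemporaryDirectory removes the directory and everything in it on exit,
    # including when `analyze` raises an exception.
    with tempfile.TemporaryDirectory() as workdir:
        path = os.path.join(workdir, "upload.bin")
        with open(path, "wb") as f:
            f.write(video_bytes)
        # Only the analysis result leaves this function, never the file itself.
        return analyze(path)


# Hypothetical analyzer that just reports the file size.
result = analyze_ephemerally(b"fake video bytes", lambda p: {"size": os.path.getsize(p)})
print(result)
```

Tying cleanup to the `with` block means the file is removed even on error paths, which is usually where leftover copies come from.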

This approach gives you the best of both worlds: powerful, accurate detection to protect your platform without compromising your data security or privacy obligations. It removes a massive barrier to entry and lets teams handling high-stakes content integrate this critical technology with confidence.

By embracing these kinds of advanced, privacy-conscious tools, a trust and safety program can finally shift from a reactive, defensive posture to a proactive and resilient one. If you want to dive deeper into the technical side, you can learn more about forensic video analysis software and the methods that make it work.

How to Know if Your Trust and Safety Efforts Are Actually Working

So, you're putting in the work to keep your platform safe. But how do you know if it's paying off? It's a surprisingly tough question to answer. If you just count the number of accounts you ban or posts you take down, you're only seeing a sliver of the picture. A massive takedown number could mean you're doing a great job, or it could mean your platform is drowning in harmful content.

To really get a handle on it, you have to look past those simple volume numbers. You need to focus on key performance indicators (KPIs) that give you a complete health report for your platform. This approach shifts trust and safety from a reactive, fire-fighting department into a proactive team that builds user confidence and defends your brand. The right metrics don’t just tell you what happened; they help you see what’s coming.

Key Performance Indicators for a Healthy Platform

Zeroing in on the right KPIs is everything. These metrics shouldn't just track the volume of bad stuff, but also how fast and how accurately you're dealing with it. They give you a balanced perspective, showing you what the user experience is like while also grading your own team's performance.

Here are a few essential KPIs every T&S team should have on their dashboard:

- Prevalence of Violative Content (PVC): This is the big one. It measures how much harmful content your users actually see before you can get to it. A low PVC is a strong signal that your proactive detection systems are on point.

- Time to Action (TTA): How long does it take your team to respond once content is flagged? A faster TTA, especially for serious threats, directly minimizes the harm people are exposed to.

- User Reporting Accuracy: What percentage of user reports actually result in you taking action? This KPI tells you how well your users understand your rules and can shine a light on policies that might be confusing.

- Moderator Accuracy Rate: How consistently are your moderators—both human and AI—applying the rules? High accuracy is fundamental for being fair and earning user trust.
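
Here is a rough sketch of how these four KPIs might be computed from raw counts. The exact formulas are assumptions (for example, measuring prevalence by views and summarizing Time to Action with a median); teams define these metrics differently, so treat this as a starting point.

```python
from statistics import median


def ratio(numerator: float, denominator: float) -> float:
    """Safe division that returns 0.0 when there is no data yet."""
    return numerator / denominator if denominator else 0.0


def prevalence(violative_views: int, total_views: int) -> float:
    """Share of all content views that landed on violative content."""
    return ratio(violative_views, total_views)


def median_time_to_action(minutes_per_case: list[float]) -> float:
    """Median minutes between a flag and the action taken on it."""
    return median(minutes_per_case)


def user_reporting_accuracy(actioned_reports: int, total_reports: int) -> float:
    """Share of user reports that resulted in an enforcement action."""
    return ratio(actioned_reports, total_reports)


def moderator_accuracy(upheld_on_audit: int, decisions_audited: int) -> float:
    """Share of audited moderation decisions that the audit agreed with."""
    return ratio(upheld_on_audit, decisions_audited)


# Made-up numbers, purely for illustration.
print(prevalence(12, 10_000))
print(median_time_to_action([5, 30, 12]))
print(user_reporting_accuracy(40, 100))
print(moderator_accuracy(188, 200))
```

A median is used for Time to Action because a handful of very slow cases would otherwise dominate an average; tracking a high percentile alongside it is a common complement.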

Think of these metrics like a doctor checking a patient's vital signs. A single number might not tell you much, but looking at them together gives you a complete picture of health and helps you spot problems before they become emergencies.

Turning Data Into Better Decisions

Gathering all this data is just step one. The real magic happens when you use it to make smarter calls on your policies, tools, and training. Your KPIs should be the engine driving your strategy, helping you put your resources where they’ll have the biggest impact.

For instance, if your Time to Action suddenly skyrockets for a specific type of violation, that's a huge red flag. It might mean your automated models are falling behind or your human team needs better training on a new threat. If User Reporting Accuracy is in the gutter, it’s a clear sign you need to make your community guidelines easier to understand or improve the reporting flow itself.
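
A check like the Time to Action red flag described above can be automated with a simple comparison against a baseline. The 2x factor below is an arbitrary assumption, and the function name is hypothetical; pick a threshold that matches how noisy your own data is.

```python
from statistics import median


def tta_spiked(baseline_minutes: list[float],
               recent_minutes: list[float],
               factor: float = 2.0) -> bool:
    """True when the recent median TTA exceeds the baseline median by `factor`."""
    # Without data on both sides there is nothing meaningful to compare.
    if not baseline_minutes or not recent_minutes:
        return False
    return median(recent_minutes) > factor * median(baseline_minutes)


# Made-up numbers: last month's cases vs. this week's, for one violation type.
print(tta_spiked([10, 12, 11], [30, 28, 35]))
print(tta_spiked([10, 12, 11], [12, 13, 11]))
```

Running this per violation type, rather than across the whole queue, is what lets you tell "our models are behind on a new threat" apart from "the whole team is understaffed."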

It’s all about creating a feedback loop. Microsoft’s T&S teams, for example, acted on nearly 245,000 ads following complaints but also reversed 1.5 million ad rejections after advertisers appealed. This data isn't just a report card; it's a roadmap that helps you constantly fine-tune your systems to build a platform people feel safe using.

Navigating the Legal and Ethical Maze of T&S

Trust and safety decisions are never made in a vacuum. They exist at the messy intersection of global laws, cultural norms, and a company's own ethical compass. Every T&S team has to walk this tightrope, carefully balancing user protection against fundamental rights like freedom of expression. This job gets even tougher when you consider the patchwork of international regulations that often contradict each other.

What one country considers dangerous misinformation might be seen as protected political speech just across the border. This creates a massive operational headache for any global platform. The goal is to create nuanced policies that can adapt to different legal environments without feeling inconsistent or arbitrary to users around the world.

The Gray Areas of Content Moderation

The easy calls aren't the problem. The real work in trust and safety happens in the gray areas—the controversial, often upsetting, but technically legal content. How do you define hate speech in a way that respects cultural context? Where do you draw the line between a harmful conspiracy theory and legitimate dissent?

These aren't just philosophical questions; they are daily operational challenges. Get them wrong, and the consequences are serious. Being too aggressive can alienate users and lead to accusations of censorship. Being too permissive allows harmful content to spread, eroding the safety and trust of your platform.

This is exactly why a strong ethical framework—one that’s informed by the law but not limited by it—is so essential. It acts as a compass when the law alone doesn't give you a clear direction.

A core ethical principle should always be preventing real-world harm. This means that even if a piece of content is technically legal, a T&S team must weigh its potential to incite violence, harassment, or a public health crisis before making a final call.

Building Trust Through Transparency and Fairness

In this complex environment, transparency isn't just a "nice-to-have." It’s a fundamental requirement for building and keeping user trust. People are far more likely to accept a decision they disagree with if they understand the rules and believe the process was fair. This is where transparency reports and a solid appeals process become your most important tools.

Here’s how they create a healthier ecosystem:

- Transparency Reports: Regularly publishing data on content removals, account suspensions, and government requests shows you’re accountable. It offers hard evidence that you're committed to the safety goals you've publicly stated.

- Fair Appeals Processes: No moderation system is perfect. Giving users a clear and accessible way to appeal a decision shows respect. It also creates a vital feedback loop that helps you improve moderator accuracy and make your policies clearer over time.

The Microsoft figure cited earlier, 1.5 million ad rejections overturned after advertisers appealed, shows how crucial these systems are for correcting errors. By giving users a voice and being open about your actions, you can prove your trust and safety efforts are principled, not punitive. Improving these systems often starts with foundational knowledge; you can explore this further in our guides on developing effective media literacy lesson plans to help users better navigate the digital world.

Answering Your Top Trust and Safety Questions

When you're building or scaling a trust and safety program, a lot of questions come up. Here are some straight answers to the most common ones we hear from organizations just like yours.

Where Should a T&S Team Sit in an Organization?

Honestly, there's no single "right" place for a Trust and Safety team. The best fit really depends on your company's DNA.

We often see T&S teams report up through Legal, Operations, or even Product. Tucking it under Legal keeps the team tightly aligned with regulatory demands and compliance. Placing it in Operations puts the focus on scaling moderation and creating efficient workflows. And when T&S lives within Product, safety can be designed into features from the ground up, which is a huge advantage.

How Much Should We Invest in Trust and Safety?

Your investment should grow with your user base and the level of risk you face. A small startup might get by initially with a single person setting policy and using basic moderation tools.

But for a large platform, it's a different story. It’s not uncommon to see them dedicate 2-5% of their total operational budget to T&S. That budget covers everything from global moderation teams and specialized tech (like a deepfake detector) to dedicated legal support. The key is to stop thinking of T&S as a cost center and start seeing it for what it is: a core business function.

Think of it this way: the cost of a single major safety incident—in user trust, brand damage, and regulatory fines—will almost always dwarf what you would have spent on proactive T&S investment.

Can We Just Outsource All of Our Content Moderation?

Outsourcing can be a smart move for handling large volumes of straightforward policy violations, and it's often budget-friendly. But relying on it completely is a mistake.

Your in-house team needs to keep a firm grip on the reins. They should be the ones creating policy, running quality control, and handling the most sensitive, complex cases that require deep context. A hybrid model is usually the most resilient approach for a modern trust and safety operation—let vendors manage the first line of defense, but escalate the tough stuff to your internal experts.